Naive Bayes Fine Tuning

The Fine-Tuning Naive Bayes (FTNB) function is based on the algorithm of Khalil El Hindi (Fine tuning the Naive Bayesian learning Algorithm, in AI Communications, 27(2):133–141, 2014). The aim of the FTNB algorithm is to improve the classification accuracy of a Naïve Bayes classifier by adjusting some of the entries of the conditional probability distributions of the feature nodes. Iterating over the training cases, we compute the classification of each case according to the model. If the classification is incorrect, we change some of the conditional probabilities used in this classification to increase the likelihood of a correct classification. The conditional probabilities are updated after each incorrectly classified case, so the order of the cases matters.
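The following Python sketch illustrates one pass of this procedure. It is not the HUGIN implementation and does not reproduce the exact update formula of the paper: the update rule (move the probability of the observed value up for the actual class and down for the predicted class, then renormalize) is a simplifying assumption, and the names `predict`, `fine_tune_pass`, `priors`, and `cpts` are hypothetical.

```python
def predict(priors, cpts, case):
    """Return the class with the highest (unnormalized) Naive Bayes score."""
    scores = {}
    for c, prior in priors.items():
        score = prior
        for feature, value in case.items():
            score *= cpts[feature][c][value]
        scores[c] = score
    return max(scores, key=scores.get)


def fine_tune_pass(priors, cpts, cases, target, learning_rate):
    """Run one pass over the cases and return the number of misclassified cases.

    cpts[feature][cls][value] holds P(feature = value | target = cls).
    The tables are modified in place after every misclassified case,
    which is why the order of the cases affects the result.
    """
    errors = 0
    for row in cases:
        actual = row[target]
        case = {f: v for f, v in row.items() if f != target}
        predicted = predict(priors, cpts, case)
        if predicted == actual:
            continue
        errors += 1
        for feature, value in case.items():
            up = cpts[feature][actual]       # distribution given the actual class
            down = cpts[feature][predicted]  # distribution given the predicted class
            # Assumed simplified update, not the formula from the paper.
            up[value] += learning_rate * (1.0 - up[value])
            down[value] -= learning_rate * down[value]
            # Renormalize so that each distribution still sums to one.
            for dist in (up, down):
                total = sum(dist.values())
                for key in dist:
                    dist[key] /= total
    return errors
```

Because the tables change as soon as a case is misclassified, a later case in the same pass is classified with the already adjusted probabilities.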

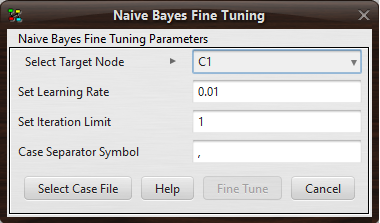

After a full iteration over all cases, the improvement of the model is computed. The FTNB algorithm continues until no improvement occurs (or until a few iterations after no improvement). The FTNB algorithm assumes that the model is a Naïve Bayes model and takes four arguments: 1) the target node, 2) a learning rate, 3) an upper limit on the number of iterations after no improvement, and 4) a case separator symbol (the separator used in the data file). Figure 1 displays the NB Fine Tuning dialog. It is found under the learning submenu of the network menu.

Figure 1: Naive Bayes Fine Tuning Dialog.
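The stopping rule can be sketched in Python as follows. This is one possible reading of the description, not the HUGIN implementation: it assumes that the limit counts consecutive passes without improvement, and the names `fine_tune`, `run_pass`, `evaluate`, and `max_no_improvement` are hypothetical.

```python
def fine_tune(run_pass, evaluate, max_no_improvement=0):
    """Repeat full passes until the score stops improving.

    run_pass: callable that performs one full pass over all cases.
    evaluate: callable that returns the current score (higher is better).
    max_no_improvement: assumed to be the number of extra passes allowed
        after a pass that brought no improvement.
    Returns the best score seen and the number of passes performed.
    """
    best_score = evaluate()
    stale_passes = 0
    total_passes = 0
    while stale_passes <= max_no_improvement:
        run_pass()
        total_passes += 1
        score = evaluate()
        if score > best_score:
            best_score = score
            stale_passes = 0  # improvement resets the counter
        else:
            stale_passes += 1
    return best_score, total_passes
```

With `max_no_improvement=0` the loop stops after the first pass that does not improve the score. The sketch only tracks the best score; it does not restore the parameters that produced it.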