D-Separation (Theory)

The rules of d-separation, due to Pearl (1988), can be used to determine the dependences and independences among the variables of a Bayesian network. This is useful for, e.g., (structural) model verification and explanation. It is possible to perform d-separation analysis in HUGIN when the network is in run-mode.

To use the rules of d-separation, one must be familiar with the three fundamental kinds of connections:

X \(\rightarrow\) Y \(\rightarrow\) Z (serial connection): Information may be transmitted through the connection unless the state of Y is known. Example: If we observe the rain falling (Y), any knowledge that there is a dark cloud (X) is irrelevant to any hypothesis (or belief) about wet lawn (Z). On the other hand, if we do not know whether it rains or not, observing a dark cloud will increase our belief about rain, which in turn will increase our belief about wet lawn.

X \(\leftarrow\) Y \(\rightarrow\) Z (diverging connection): Information may be transmitted through the connection unless the state of Y is known. Example: If we observe the rain falling (Y) and then that the lawn is wet (X), the added knowledge that the lawn is wet (X) will tell us nothing more about the type of weather report to expect from the radio (Z) than the information gained from observing the rain alone. On the other hand, if we do not know whether it rains or not, a rain report on the radio will increase our belief that it is raining, which in turn will increase our belief that the lawn is wet.

X \(\rightarrow\) Y \(\leftarrow\) Z (converging connection): Information may be transmitted through the connection only if information about the state of Y or one of its descendants is available. Example: If we know the lawn is wet (Y) and that the sprinkler is on (X), then this will affect our belief about whether it has been raining or not (Z), as the wet lawn leads us to believe that this was because of the sprinkler rather than the rain. On the other hand, if we have no knowledge about the state of the lawn, then observing that it rains will not affect our belief about whether the sprinkler has been on or not.
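The converging case is the least intuitive of the three, so a small numeric illustration may help. The sketch below computes the belief in rain by brute-force enumeration over a sprinkler \(\rightarrow\) wet lawn \(\leftarrow\) rain network. All probabilities and the names `P_RAIN`, `P_SPRINKLER`, `P_WET`, `joint`, and `prob_rain` are assumptions made up for this example; they are not taken from HUGIN or from any real data.

```python
from itertools import product

# Hypothetical numbers, chosen only to illustrate the converging connection.
P_RAIN = 0.2
P_SPRINKLER = 0.3
# P(lawn is wet | sprinkler, rain), keyed by (sprinkler, rain).
P_WET = {
    (True, True): 0.99,
    (True, False): 0.9,
    (False, True): 0.8,
    (False, False): 0.0,
}


def joint(sprinkler, rain, wet):
    """Joint probability of one full configuration of the three variables."""
    p = P_SPRINKLER if sprinkler else 1 - P_SPRINKLER
    p *= P_RAIN if rain else 1 - P_RAIN
    p_wet = P_WET[(sprinkler, rain)]
    return p * (p_wet if wet else 1 - p_wet)


def prob_rain(**evidence):
    """P(rain = True | evidence), summing the joint over consistent configurations."""
    numerator = denominator = 0.0
    for s, r, w in product([True, False], repeat=3):
        world = {"sprinkler": s, "rain": r, "wet": w}
        if any(world[name] != value for name, value in evidence.items()):
            continue  # configuration contradicts the evidence
        p = joint(s, r, w)
        denominator += p
        if r:
            numerator += p
    return numerator / denominator


# No information about the lawn: the sprinkler tells us nothing about rain.
print(prob_rain(), prob_rain(sprinkler=True))
# Lawn known to be wet: learning that the sprinkler is on lowers the belief in rain.
print(prob_rain(wet=True), prob_rain(wet=True, sprinkler=True))
```

With these assumed numbers, observing the sprinkler leaves the belief in rain unchanged while the lawn is unobserved, and lowers it once the lawn is known to be wet.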

Two variables X and Z are d-separated if for all paths between X and Z there is an intermediate variable Y such that either

the connection is serial or diverging and Y is instantiated (i.e., its value is known), or

the connection is converging and neither Y nor any of its descendants has received evidence.

If X and Z are not d-separated, they are d-connected. Note that dependence and independence depend on what you know (and do not know). That is, the evidence available plays a significant role when determining the dependence and independence relations.
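The definition above can be sketched directly in code by enumerating every path between two variables and checking whether some intermediate variable blocks it. This is a minimal illustration only: the graph `EDGES` is a hypothetical network assembled from the rain, sprinkler, and radio examples above (it is not the network of Figure 1), all function names are invented for this sketch, and path enumeration is only practical for small graphs.

```python
# Hypothetical DAG built from the examples above; each pair is (parent, child).
EDGES = [
    ("cloud", "rain"),
    ("rain", "lawn"),
    ("rain", "report"),
    ("sprinkler", "lawn"),
]


def descendants(node, edges):
    """All nodes reachable from `node` by following directed links."""
    seen, stack = set(), [node]
    while stack:
        current = stack.pop()
        for parent, child in edges:
            if parent == current and child not in seen:
                seen.add(child)
                stack.append(child)
    return seen


def simple_paths(x, z, edges):
    """Yield every path from x to z that visits no node twice, ignoring direction."""
    neighbours = {}
    for parent, child in edges:
        neighbours.setdefault(parent, set()).add(child)
        neighbours.setdefault(child, set()).add(parent)

    def walk(path):
        if path[-1] == z:
            yield path
            return
        for nxt in sorted(neighbours.get(path[-1], ())):
            if nxt not in path:
                yield from walk(path + [nxt])

    yield from walk([x])


def path_blocked(path, edges, evidence):
    """True if some intermediate variable on the path blocks it."""
    edge_set = set(edges)
    for a, y, b in zip(path, path[1:], path[2:]):
        converging = (a, y) in edge_set and (b, y) in edge_set
        if converging:
            # Blocked unless y or one of its descendants has received evidence.
            if y not in evidence and not (descendants(y, edges) & evidence):
                return True
        elif y in evidence:
            # Serial or diverging connection with y instantiated.
            return True
    return False


def d_separated(x, z, edges, evidence=()):
    """True if every path between x and z is blocked given the evidence."""
    evidence = set(evidence)
    return all(path_blocked(p, edges, evidence) for p in simple_paths(x, z, edges))


print(d_separated("cloud", "lawn", EDGES))              # serial, no evidence
print(d_separated("cloud", "lawn", EDGES, {"rain"}))    # serial, rain known
print(d_separated("sprinkler", "rain", EDGES))          # converging, no evidence
print(d_separated("sprinkler", "rain", EDGES, {"lawn"}))  # converging, lawn known
```

Running the same query with and without evidence shows how the answer changes with what is known, which is the point made in the paragraph above.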

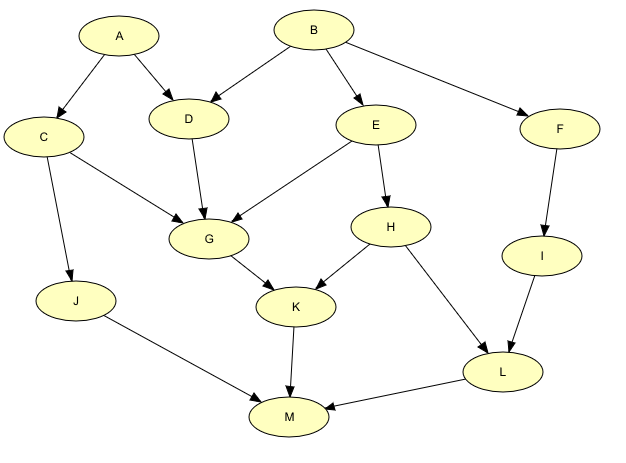

Let us consider the DAG in Figure 1. Using the above rules, we find e.g. that

C and L are d-separated, as each path between C and L includes at least one converging connection.

C and L are d-connected given K (i.e., the value of K is known), as there are now several paths between C and L where the converging connections contain an instantiated variable (e.g., C \(\rightarrow\) G \(\rightarrow\) K \(\leftarrow\) H \(\rightarrow\) L) or a descendant (K) of the node in the converging connection has been instantiated (e.g., C \(\leftarrow\) A \(\rightarrow\) D \(\leftarrow\) B \(\rightarrow\) E \(\rightarrow\) H \(\rightarrow\) L).

Figure 1: A sample network.

As mentioned in Introduction to Bayesian Networks, an alternative to using the d-separation rules is to use an equivalent criterion due to Lauritzen et al. (1990): Let A, B, and C be disjoint sets of variables. Then

identify the smallest sub-graph that contains A \(\cup\) B \(\cup\) C and their ancestors,

add undirected links between nodes having a common child, and

drop directions on all directed links.

Now, if every path in the resulting undirected graph from a variable in A to a variable in B contains a variable in C, then A is conditionally independent of B given C.
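The three steps and the final path check can be sketched as follows. This is an illustrative implementation under stated assumptions, not HUGIN's own code: the graph `EDGES` is a hypothetical network built from the rain, sprinkler, and radio examples earlier on this page, and the names `ancestral_set` and `independent` are invented for this sketch.

```python
# Hypothetical DAG built from the earlier examples; each pair is (parent, child).
EDGES = [
    ("cloud", "rain"),
    ("rain", "lawn"),
    ("rain", "report"),
    ("sprinkler", "lawn"),
]


def ancestral_set(nodes, edges):
    """Return the given nodes together with all of their ancestors."""
    result, stack = set(nodes), list(nodes)
    while stack:
        node = stack.pop()
        for parent, child in edges:
            if child == node and parent not in result:
                result.add(parent)
                stack.append(parent)
    return result


def independent(a, b, c, edges):
    """True if the criterion says A is conditionally independent of B given C."""
    a, b, c = set(a), set(b), set(c)

    # Step 1: smallest sub-graph containing A, B, C and their ancestors.
    keep = ancestral_set(a | b | c, edges)
    sub = [(p, ch) for p, ch in edges if p in keep and ch in keep]

    # Step 3 (applied first for convenience): drop directions on directed links.
    links = {frozenset(edge) for edge in sub}

    # Step 2: add undirected links between nodes having a common child.
    for child in keep:
        parents = [p for p, ch in sub if ch == child]
        for i, p in enumerate(parents):
            for q in parents[i + 1:]:
                links.add(frozenset((p, q)))

    neighbours = {node: set() for node in keep}
    for link in links:
        u, v = tuple(link)
        neighbours[u].add(v)
        neighbours[v].add(u)

    # Final check: search from A without entering C; reaching B means a
    # path exists that contains no variable in C.
    seen, stack = set(a), list(a)
    while stack:
        for nxt in neighbours[stack.pop()]:
            if nxt in c or nxt in seen:
                continue
            if nxt in b:
                return False
            seen.add(nxt)
            stack.append(nxt)
    return True


print(independent({"sprinkler"}, {"rain"}, set(), EDGES))
print(independent({"sprinkler"}, {"rain"}, {"lawn"}, EDGES))
```

The order of steps 2 and 3 does not matter for the result, since the added links are undirected either way. In the second query the lawn enters the sub-graph, so its two parents become linked and the sprinkler and rain are no longer separated.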